The Suricata IDS/IPS has native support for Netfilter queue (NFQ). This brings IPS functionality to users running Suricata on Linux.

Suricata 1.1beta2 introduces a lot of new features related to the NFQ mode.

New stream inline mode

One of the main improvements to Suricata's IPS mode is the new stream engine dedicated to inline operation. Victor Julien has a great blog post about it.

Multiqueue support

Suricata can now be started on multiple queues by repeating the -q option on the command line, once per queue identifier. The following syntax:

suricata -q 0 -q 1 -c /etc/suricata.yaml

will start a Suricata instance listening on Netfilter queues 0 and 1.

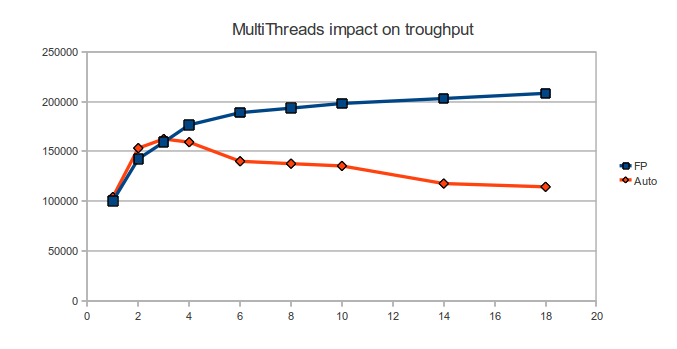

This option was added to improve the performance of Suricata in NFQ mode. One observed limitation is the number of packets per second that can be sent to a single queue. Being able to specify multiple queues makes it possible to increase performance. The biggest impact is on multicore systems, where it adds scalability.

This feature can be used with the --queue-balance option of the NFQUEUE target, which was added in Linux 2.6.31. When using --queue-balance x:x+n instead of --queue-num, packets are balanced across the given queues, and packets belonging to the same connection are put into the same nfqueue.
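As a rough sketch of how the two pieces fit together, the helper below prints (rather than applies) an iptables rule that balances forwarded traffic over a range of queues, along with the matching Suricata command line. The function name nfq_balance_cmds and the choice of the FORWARD chain are assumptions made for illustration; the configuration path is the one used in the command above.

```shell
#!/bin/sh
# Hypothetical helper (not part of Suricata or iptables): prints the commands
# needed to spread traffic over queues FIRST..LAST. Nothing is applied.
nfq_balance_cmds() {
    first=$1
    last=$2
    # --queue-balance takes an inclusive range first:last.
    echo "iptables -I FORWARD -j NFQUEUE --queue-balance ${first}:${last}"
    # Suricata needs one -q option per queue in that range.
    cmd="suricata"
    q=$first
    while [ "$q" -le "$last" ]; do
        cmd="$cmd -q $q"
        q=$((q + 1))
    done
    echo "$cmd -c /etc/suricata.yaml"
}

nfq_balance_cmds 0 1
```

Printing the commands first makes it easy to check that the range given to iptables and the list of -q options agree before running anything as root.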

NFQ mode setting

Presentation

One of the difficulties of running an IPS is building a suitable firewall ruleset. Following my blog post on this issue, I decided to implement most of the existing modes in Suricata.

When running in NFQ inline mode, it is now possible to use a simulated non-terminal NFQUEUE verdict by using the ‘repeat’ mode.

This makes it possible to send all the needed packets to Suricata with a single rule:

iptables -I FORWARD -m mark ! --mark $MARK/$MASK -j NFQUEUE

Below that rule, you can keep your standard filtering ruleset.

Configuration and other details

To activate this mode, you need to set mode to ‘repeat’ in the nfq section.

nfq:
  mode: repeat
  repeat_mark: 1
  repeat_mask: 1

This option uses the NF_REPEAT verdict instead of the standard NF_ACCEPT verdict. The effect is that the packet is sent back to the start of the table where the queuing decision was taken. Since Suricata has put the mark $repeat_mark on the packet, with respect to the mask $repeat_mask, the packet no longer matches the iptables NFQUEUE rule when it reaches it again, and the ruleset following this rule is used.
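To make the mark logic concrete, here is a small simulation in shell arithmetic. It assumes the usual Netfilter semantics, where a packet matches value/mask when (packet mark AND mask) equals the value, and where setting a mark only touches the bits covered by the mask. The function names needs_queue and apply_mark are hypothetical, and nothing here talks to the kernel.

```shell
#!/bin/sh
# Illustrative only: models the mark test and the mark update with integers.

# Succeeds when "-m mark ! --mark VALUE/MASK" would match, i.e. the packet
# still has to be sent to NFQUEUE. Arguments: packet mark, value, mask.
needs_queue() {
    [ $(( ($1 & $3) != $2 )) -eq 1 ]
}

# Prints the packet mark after VALUE/MASK has been applied to it.
# Arguments: packet mark, value, mask.
apply_mark() {
    echo $(( ($1 & ~$3) | ($2 & $3) ))
}

mark=0                              # fresh packet, no mark yet
needs_queue "$mark" 1 1 && echo "pass 1: queued to Suricata"
mark=$(apply_mark "$mark" 1 1)      # NF_REPEAT with repeat_mark/repeat_mask of 1
needs_queue "$mark" 1 1 || echo "pass 2: skipped, rest of the ruleset applies"
```

Because only the masked bits are changed, other bits of the mark that the rest of the ruleset may rely on are left alone under these assumptions.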

The ‘mode’ option in nfq section can have two other values:

- accept (which is the default, simple mode)

- route (which is a little bit tricky)

The idea behind the route option is that a program using NFQ can issue a verdict whose effect is to send the packet to another queue. It is thus possible to chain programs using NFQ.

To activate this option, you have to use the following syntax:

nfq:
  mode: route
  route_queue: 2

There are not many uses for this option. A mad mind could think of chaining Suricata and Snort in IPS mode 😉

The nfq_set_mark keyword

Effect and usage of nfq_set_mark

A new nfq_set_mark keyword has been added to the rule options.

This is an enhancement of the NFQ mode. If a packet matches a rule using nfq_set_mark in NFQ mode, it is marked with the mark/mask specified in the option during the verdict.

The usage is really simple. In any rule, you can add the nfq_set_mark keyword to specify the mark to put on a packet and the mask to apply:

pass tcp any any -> any 80 (msg:"TCP port 80"; sid:91000004; nfq_set_mark:0x80/0x80; rev:1;)

That’s great, but what can I do with that?

If you are not familiar with advanced network capabilities, you may be wondering how this can be used. A Netfilter mark can be used to modify how the network stack handles a packet, i.e. routing, quality of service, and Netfilter itself. The concept is thus the following: you can use Suricata to detect a suspect packet and decide to change the way it is handled by the network stack.

Thanks to the CONNMARK target, you can modify the way all packets of the connection are handled.

Among the possibilities:

- Degrade QoS for a connection when a suspect packet has been seen

- Trigger Netfilter logging of all subsequent packets of the connection (logging could be done in pcap for instance)

- Change routing to send the traffic to a dedicated device
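As one possible illustration of the logging case, the rules below copy the packet mark set by nfq_set_mark:0x80/0x80 onto the connection and then hand every later packet of that connection to NFLOG. The mangle table, the POSTROUTING and PREROUTING chains, and NFLOG group 5 are assumptions chosen for the example, as is the function name connmark_log_rules. The script only prints the rules so they can be reviewed before being applied by hand.

```shell
#!/bin/sh
# Sketch only: prints iptables rules instead of applying them.
MARK=0x80        # value used with nfq_set_mark in the pass rule above
MASK=0x80
NFLOG_GROUP=5    # arbitrary group number, assumed for this example

connmark_log_rules() {
    # Packet marked by Suricata: tag the whole connection with the same bits.
    echo "iptables -t mangle -A POSTROUTING -m mark --mark ${MARK}/${MASK} -j CONNMARK --set-mark ${MARK}/${MASK}"
    # Any packet of a tagged connection: send it to NFLOG for logging.
    echo "iptables -t mangle -A PREROUTING -m connmark --mark ${MARK}/${MASK} -j NFLOG --nflog-group ${NFLOG_GROUP}"
}

connmark_log_rules
```

The same pattern should carry over to the other two cases by replacing the NFLOG rule with a QoS or routing decision keyed on the connection mark.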