Powerline

Powerline is a status-line plugin that changes the prompt or status line for shells, tmux and vim.

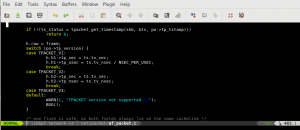

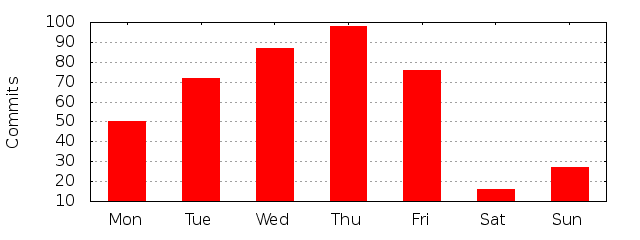

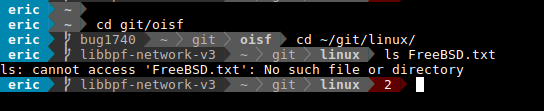

The result is nice looking and useful for bash:

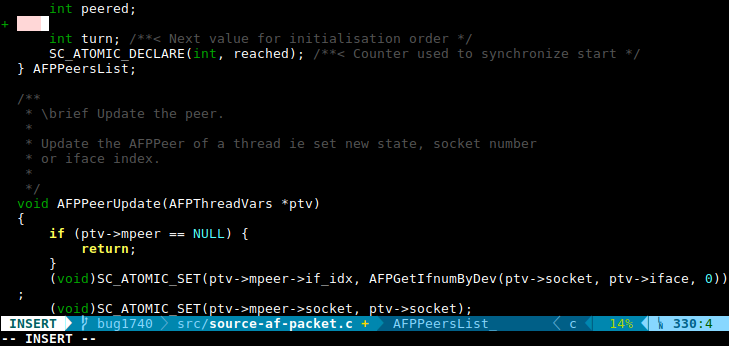

and for gvim:

The only catch is that, even though the documentation is good, installation is not straightforward. So here’s what I’ve done.

Installation on Debian

System

sudo aptitude install fonts-powerline powerline python-powerline

On Ubuntu 16.04 you may have to install python3-powerline instead of python-powerline.

Install configuration

mkdir -p ~/.config/powerline
cp /usr/share/powerline/config_files/config.json ~/.config/powerline/
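If you rerun these steps later, a plain cp would overwrite a config you have already edited. Here is a minimal sketch of a guard against that. The function name install_powerline_config is my own invention, not something shipped with Powerline:

```shell
# Hypothetical helper (not part of Powerline): copy a stock config file
# into a directory unless a file of the same name already exists there.
install_powerline_config() {
    local src="$1" dest_dir="$2"
    local dest
    dest="$dest_dir/$(basename "$src")"
    mkdir -p "$dest_dir" || return 1
    if [ -e "$dest" ]; then
        # Keep the user's edited copy untouched.
        echo "keeping existing $dest" >&2
    else
        cp "$src" "$dest"
    fi
}
```

With the paths used above, the call would be: install_powerline_config /usr/share/powerline/config_files/config.json ~/.config/powerline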

Then edit the file to change the shell theme from default to default_leftonly, which adds the git status:

--- /usr/share/powerline/config_files/config.json 2016-07-13 23:43:25.000000000 +0200
+++ .config/powerline/config.json 2016-09-14 00:05:04.368654864 +0200
@@ -18,7 +18,7 @@
         },
         "shell": {
             "colorscheme": "default",
-            "theme": "default",
+            "theme": "default_leftonly",
             "local_themes": {
                 "continuation": "continuation",
                 "select": "select"

Now, you need to refresh the fonts or restart X.
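If you prefer not to edit config.json by hand, the theme change can probably be scripted. The sketch below assumes GNU sed (for -i) and assumes the file keeps the layout shown in the diff, with the "theme" line sitting between "shell": { and "local_themes". The function name set_shell_theme is hypothetical:

```shell
# Hypothetical helper: change the theme of the "shell" section only.
# Assumes GNU sed and the stock layout of config.json, where the shell
# section's "theme" line comes before its "local_themes" line.
set_shell_theme() {
    local config="$1" theme="$2"
    # The address range limits the substitution to the lines from
    # '"shell": {' up to the next '"local_themes"', so the themes of
    # the other sections (tmux, vim, ...) are left alone.
    sed -i '/"shell": {/,/"local_themes"/ s/"theme": "[^"]*"/"theme": "'"$theme"'"/' "$config"
}
```

For the setup described here, the call would be: set_shell_theme ~/.config/powerline/config.json default_leftonly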

Bash

Then edit ~/.bashrc and add at the end:

. /usr/share/powerline/bindings/bash/powerline.sh
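If you script your setup, it helps to add that line only once, however many times the script runs. Here is a small sketch, where append_once is a name I made up for the example:

```shell
# Hypothetical helper: append a line to a file only if that exact
# line is not already present.
append_once() {
    local line="$1" file="$2"
    # -x: match the whole line, -F: fixed string (no regex), -q: quiet.
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}
```

Usage would look like: append_once '. /usr/share/powerline/bindings/bash/powerline.sh' ~/.bashrc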

Vim

The easiest way is to install vim-addon-manager:

sudo aptitude install vim-addon-manager

Then you can simply do:

vim-addons install powerline

Then add to your ~/.vimrc:

set laststatus=2

gvim

Installation is a bit more complex, as you need to install a patched font from the Powerline fonts repository.

In my case:

mkdir ~/.fonts
cd ~/.fonts
wget 'https://github.com/powerline/fonts/raw/master/DejaVuSansMono/DejaVu%20Sans%20Mono%20for%20Powerline.ttf'
fc-cache -vf ~/.fonts/
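To check that fontconfig actually picked up the new font, you can search the output of fc-list. A minimal sketch, assuming fc-list from fontconfig is on your PATH. The function name font_installed is hypothetical:

```shell
# Hypothetical helper: succeed if fontconfig lists a font whose entry
# contains the given family name (case-insensitive, fixed string).
font_installed() {
    local family="$1"
    fc-list 2>/dev/null | grep -qiF -- "$family"
}
```

For the font downloaded above: font_installed "DejaVu Sans Mono for Powerline" && echo "font is available"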

Then edit ~/.vimrc:

set guifont=DejaVu\ Sans\ Mono\ for\ Powerline\ 10