Discovering the performance boost

While working on both the 1.0 and 1.1 branches of Suricata, I noticed a huge performance improvement in the 1.1 branch over the 1.0 branch. Parsing a given real-life pcap file took 200 seconds with 1.0 but only 30 seconds with 1.1. The boost was large enough that I decided to double-check it and to study how it had been obtained.

A git bisection showed me that the performance improvement came in at least two main steps. I then decided to study it more systematically by iterating over the revisions and, for each one, running the same test with the same basic, untuned configuration:

suricata -c ~eric/builds/suricata/etc/suricata.yaml -r benches/sandnet.pcap

and storing the log output.
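The loop over revisions can be sketched as follows. This is only a minimal illustration of the procedure, not the script actually used for these measurements: the function names, the log file naming, and the idea of passing a build command are all assumptions.

```python
import subprocess
import time


def list_commits(repo, old_rev, new_rev):
    """Return the commit hashes in old_rev..new_rev, oldest first."""
    out = subprocess.run(
        ["git", "-C", repo, "rev-list", "--reverse", f"{old_rev}..{new_rev}"],
        check=True, capture_output=True, text=True,
    ).stdout
    return out.split()


def time_command(cmd, log_path=None):
    """Run cmd, optionally store its output in log_path, return wall-clock seconds."""
    start = time.perf_counter()
    result = subprocess.run(
        cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True
    )
    elapsed = time.perf_counter() - start
    if log_path is not None:
        with open(log_path, "w") as log:
            log.write(result.stdout)
    return elapsed


def bench_range(repo, old_rev, new_rev, build_cmd, run_cmd):
    """Check out, build and time every commit between two revisions.

    build_cmd and run_cmd are argument lists supplied by the caller
    (hypothetical here: how each revision is built is not described above).
    """
    results = []
    for commit in list_commits(repo, old_rev, new_rev):
        subprocess.run(["git", "-C", repo, "checkout", "--quiet", commit], check=True)
        subprocess.run(build_cmd, cwd=repo, check=True)
        elapsed = time_command(run_cmd, f"bench-{commit[:8]}.log")
        results.append((commit, elapsed))
    return results
```

Here `run_cmd` would be the suricata command line shown above, split into a list of arguments, and the returned list of (commit, seconds) pairs is what gets graphed.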

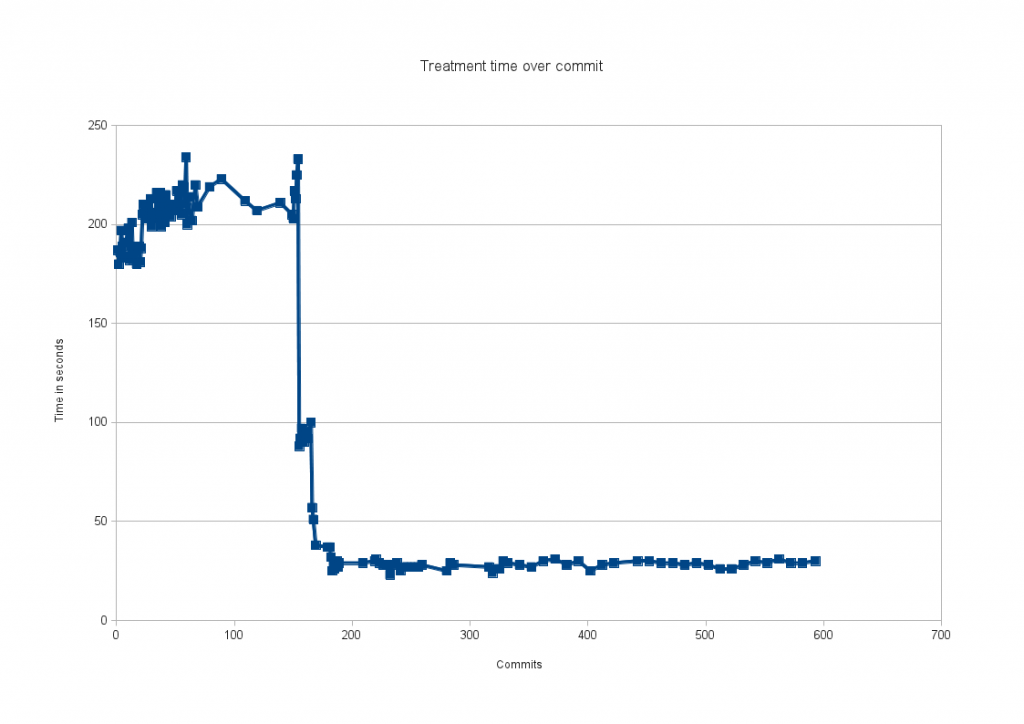

Graphing the improvements

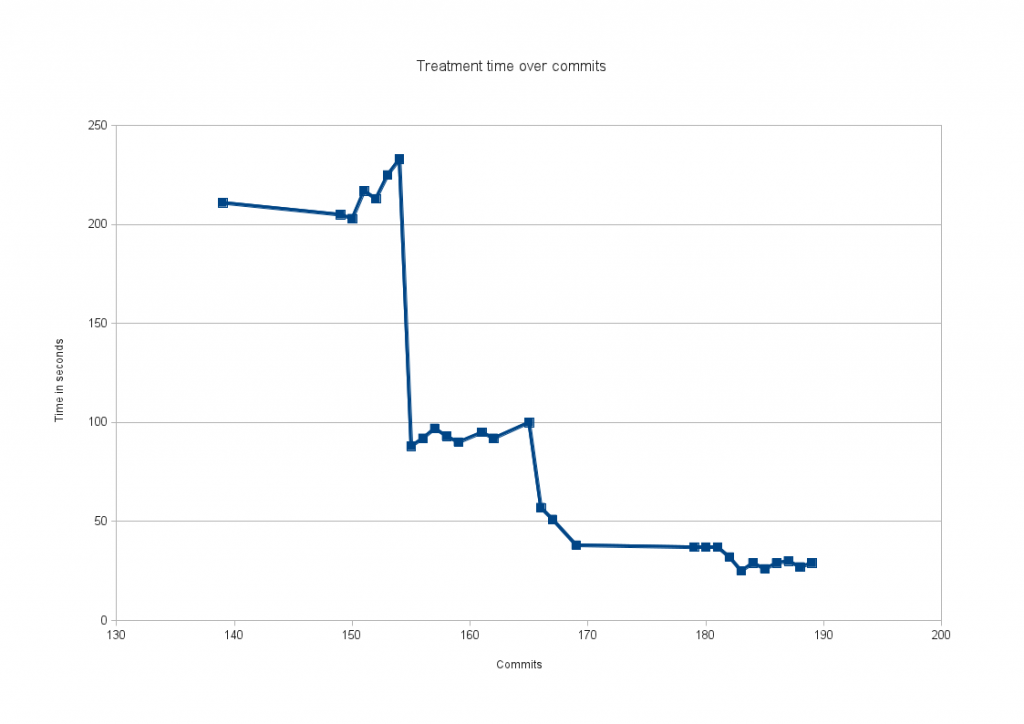

The following graph shows the evolution of processing time, commit by commit, between Suricata 1.0.2 and Suricata 1.1beta2:

It is impressive to see that the improvements are concentrated in a really short period. In terms of commit date, almost everything happened between December 1st and December 9th.

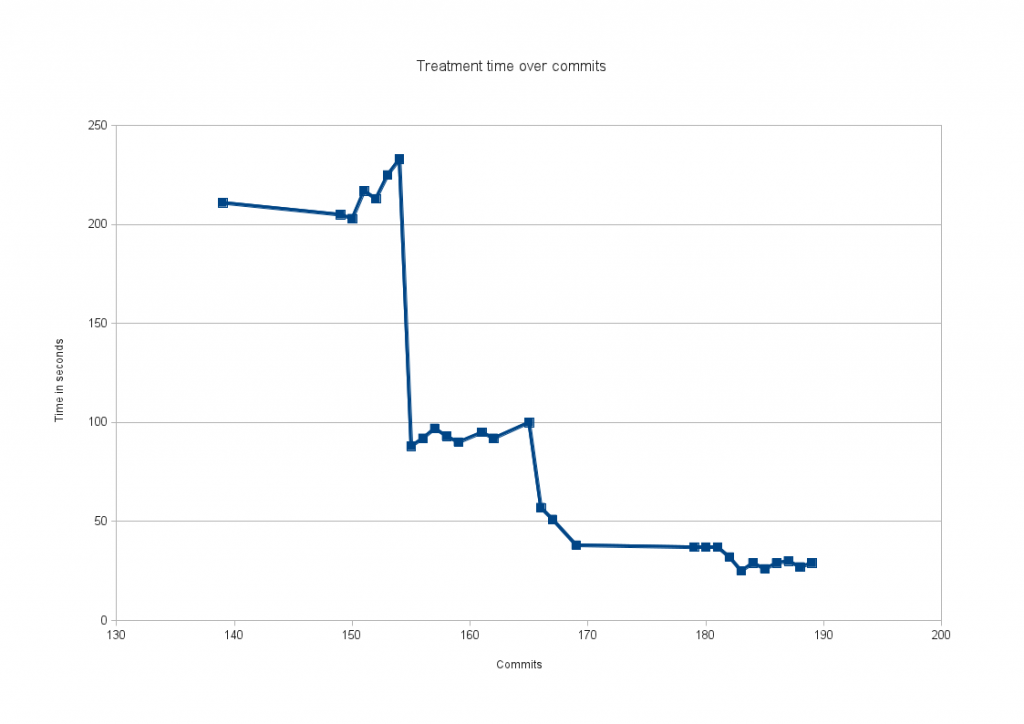

The following graph shows the same data with a zoom on the critical period:

One can see that there are two big steps and a last, less noticeable phase.

Identifying the commits

The first big step in the improvement is due to commit c61c68fd:

commit c61c68fd365bf2274325bb77c8092dfd20f6ca87

Author: Anoop Saldanha

Date: Wed Dec 1 13:50:34 2010 +0530

mpm and fast pattern support for http_header. Also support relative modifiers for http_header

This commit more than doubled the previous performance.

The second step is a commit that also doubled performance. It is again by Anoop Saldanha:

commit 72b0fcf4197761292342254e07a8284ba04169f0

Author: Anoop Saldanha

Date: Tue Dec 7 16:22:59 2010 +0530

modify detection engine to carry out uri mpm run before build match array if alproto is http and if sgh has atleast one sig with uri mpm set

Further improvements were made a few hours later by Anoop, who achieved a 20% improvement with:

commit b140ed1c9c395d7014564ce077e4dd3b4ae5304e

Author: Anoop Saldanha

Date: Tue Dec 7 19:22:06 2010 +0530

modify detection engine to run hhd mpm before building the match array

The motivation for this development was that the developers knew that matching on http_headers was not optimal because it used a single-pattern search algorithm. By switching to a multi-pattern match algorithm, they knew it would do a great prefiltering job and increase the speed. Here is Victor Julien's comment and explanation:

We knew that at the time we inspected the http_headers and a few other buffers for each potential signature match over and over again using a single pattern search algorithm. We already knew this was inefficient, so moving to a multi-pattern match algorithm that would prefilter the signatures made a lot of sense even without benching it.
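To make the prefiltering idea concrete, here is a small sketch contrasting the two approaches on a header buffer. It is not Suricata's code (Suricata is written in C and has its own MPM engines); it uses a textbook Aho-Corasick automaton as a stand-in for "a multi-pattern match algorithm", and the signature ids and patterns are invented for the example.

```python
from collections import deque


def build_automaton(patterns):
    """Build an Aho-Corasick automaton from a dict {sig_id: pattern}."""
    goto = [{}]      # goto[state][char] -> next state
    fail = [0]       # failure link of each state
    out = [set()]    # ids of signatures whose pattern ends in this state
    for sig_id, pattern in patterns.items():
        state = 0
        for ch in pattern:
            if ch not in goto[state]:
                goto.append({})
                fail.append(0)
                out.append(set())
                goto[state][ch] = len(goto) - 1
            state = goto[state][ch]
        out[state].add(sig_id)
    # Breadth-first pass to compute failure links and merge outputs.
    queue = deque(goto[0].values())
    while queue:
        state = queue.popleft()
        for ch, nxt in goto[state].items():
            queue.append(nxt)
            f = fail[state]
            while f and ch not in goto[f]:
                f = fail[f]
            fail[nxt] = goto[f].get(ch, 0)
            out[nxt] |= out[fail[nxt]]
    return goto, fail, out


def prefilter(automaton, buffer):
    """Scan the buffer once; return ids of signatures worth inspecting further."""
    goto, fail, out = automaton
    state, candidates = 0, set()
    for ch in buffer:
        while state and ch not in goto[state]:
            state = fail[state]
        state = goto[state].get(ch, 0)
        candidates |= out[state]
    return candidates


def naive_candidates(patterns, buffer):
    """Baseline: one single-pattern search over the buffer per signature."""
    return {sig_id for sig_id, pattern in patterns.items() if pattern in buffer}


# Hypothetical signatures, each reduced to one content pattern.
signatures = {
    "sig-1": "User-Agent: curl",
    "sig-2": "Host: evil.example",
    "sig-3": "curl",
}
headers = "Host: www.example.org\r\nUser-Agent: curl/7.0\r\n"

candidates = prefilter(build_automaton(signatures), headers)
assert candidates == naive_candidates(signatures, headers)
print(sorted(candidates))  # ['sig-1', 'sig-3']
```

Both functions select the same signatures, but the baseline walks the buffer once per signature while the automaton walks it once in total, which matters when a ruleset contains thousands of signatures.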

Finally, two days later, a series of two commits brought another 20-30% improvement:

commit 8bd6a38318838632b019171b9710b217771e4133

Author: Anoop Saldanha

Date: Thu Dec 9 17:37:34 2010 +0530

support relative pcre for http header. All pcre processing for http header moved to hhd engine

commit 2b781f00d7ec118690b0e94405d80f0ff918c871

Author: Anoop Saldanha

Date: Thu Dec 9 12:33:40 2010 +0530

support relative pcre for client body. All pcre processing for client body moved to hcbd engine
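As a rough sanity check, the steps listed above can be compounded and compared with the overall measurement (200 seconds down to 30 seconds). The sketch below rests on assumptions of mine: each doubling is taken as exactly halving the run time, each percentage is read as a reduction in run time, and 25% is used as the midpoint of the 20-30% range.

```python
def remaining_time(start_seconds, reductions):
    """Apply successive fractional run-time reductions to start_seconds."""
    t = start_seconds
    for r in reductions:
        t *= 1.0 - r
    return t


# Assumed reductions: two doublings (50% each), then 20%, then 25%.
steps = [0.50, 0.50, 0.20, 0.25]
estimate = remaining_time(200.0, steps)
print(f"estimated run time: {estimate:.0f} s")  # estimated run time: 30 s
```

Under these assumptions the four steps together account for essentially all of the measured difference between the two branches.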

Conclusion

It appears that all the improvements are linked to modifications of the HTTP handling. Working hard on improving HTTP features has led to an impressive performance boost. Thanks a lot to Anoop for this awesome work. As HTTP now makes up most of the traffic on the Internet, this is really good news for Suricata users!