Introduction

Some time ago, I blogged about the new IPS features in Suricata 1.1 but, at the time, did not find any killer application for the nfq_set_mark keyword. When Suricata runs in Netfilter IPS mode, this keyword lets you set the Netfilter mark on a packet when a rule matches.

This mark can then be used by Netfilter or by another network subsystem to decide how the packet should be treated.

It took some time, but I’ve found the idea. People around me know I don’t like Microsoft Office. One of the main reasons is that it is as awful as LibreOffice or OpenOffice.org but, on top of that, you have to pay for it. And even if I don’t think that people sending MS-Word files over the network should pay for that, I think they should at least benefit from really slow bandwidth. I thus decided to implement this with Suricata and Netfilter.

I’ve already told you that we will use nfq_set_mark to mark the packet, but one big task remains: how can we detect that someone is uploading an MS-Word file? The answer lies in the file extraction capabilities of Suricata. My preferred IDS/IPS is able to inspect HTTP traffic and to extract the files that are uploaded or downloaded. You can then access information about them. One of the new keywords is filemagic, which matches against the same output you would get by running the Unix file command on the file.
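To get a feeling for what filemagic reacts to, here is a minimal sketch that does not use Suricata at all. It relies on the fact that “Composite Document File V2” files start with the OLE2 signature D0 CF 11 E0 A1 B1 1A E1. The helper name is_cdf_v2 and the two sample files are made up for the illustration, and the check is only an approximation of what file does. Note that the signature is shared by other OLE2 containers, not only by MS-Word documents.

```shell
#!/bin/sh
# Hypothetical helper (not part of Suricata): returns 0 when the file starts
# with the OLE2 compound-file signature D0 CF 11 E0 A1 B1 1A E1.
is_cdf_v2() {
    # Read the first 8 bytes as hex and strip spaces and newlines.
    sig=$(od -An -tx1 -N8 "$1" | tr -d ' \n')
    [ "$sig" = "d0cf11e0a1b11ae1" ]
}

# Build two tiny sample files: one with the signature, one without.
tmpdir=$(mktemp -d)
printf '\320\317\021\340\241\261\032\341rest-of-file' > "$tmpdir/renamed.txt"
printf 'plain text' > "$tmpdir/notes.doc"

for f in "$tmpdir/renamed.txt" "$tmpdir/notes.doc"; do
    if is_cdf_v2 "$f"; then
        echo "$(basename "$f"): CDF V2 container"
    else
        echo "$(basename "$f"): something else"
    fi
done
rm -rf "$tmpdir"
```

The point of the two samples is that the extension says nothing: the content decides, which is exactly why filemagic is more useful than the file name here.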

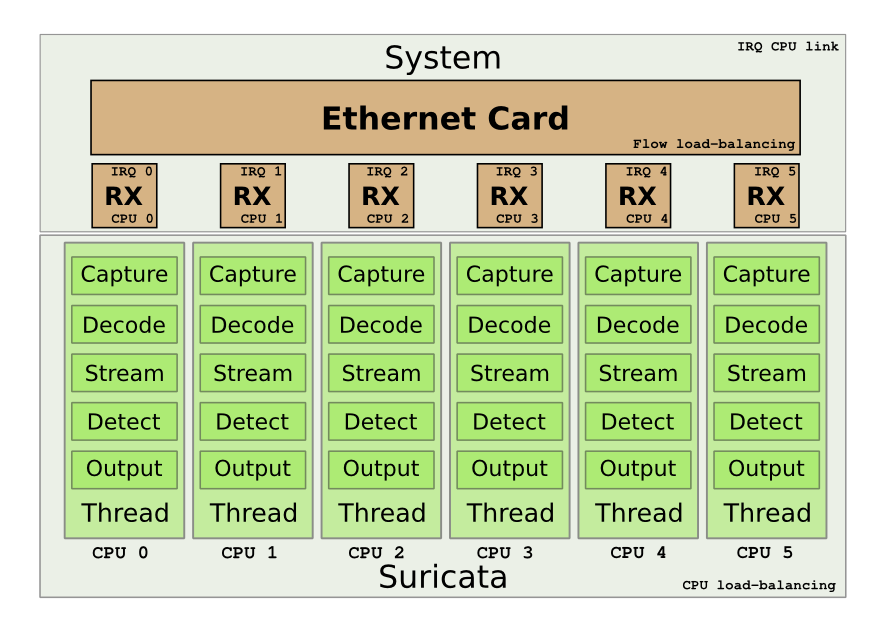

The setup

Now everything is in place. We just need to:

- Write a signature to mark packets related to an upload

- Set up Suricata as IPS

- Set up Netfilter to send all HTTP traffic to Suricata

The signature is really simple. We want HTTP traffic and a transferred file which is of type “Composite Document File V2 Document”. When this is the case, we alert and set the mark to 1:

alert http any any -> any any (msg:"Microsoft Word upload"; \
nfq_set_mark:0x1/0x1; \
filemagic:"Composite Document File V2 Document"; \
sid:666; rev:1;)

Let’s suppose we’ve saved this signature in word.rules. We can now start Suricata with:

suricata -q 0 -S word.rules

This is cool: we now have a single packet that will be marked. But, as we want to slow down the whole connection, we need to propagate the mark. We call Netfilter to the rescue, and our friend here is CONNMARK, which will transfer the mark to all packets of the connection:

iptables -A PREROUTING -t mangle -j CONNMARK --restore-mark
iptables -A POSTROUTING -t mangle -j CONNMARK --save-mark

If we are masochistic enough to try to slow ourselves down, we need to add the following line to take care of OUTPUT packets:

iptables -A OUTPUT -t mangle -j CONNMARK --restore-mark

Now that the marking is done, let’s suppose that eth0 is the interface to the Internet. In that case, we can set up the Quality of Service.

We create an HTB qdisc and a 1kbps class for our MS-Word uploaders:

tc qdisc add dev eth0 root handle 1: htb default 0
tc class add dev eth0 parent 1: classid 1:1 htb rate 1kbps ceil 1kbps

Now we can link this to our packet marking by assigning packets with firewall mark 1 (handle 1 fw) to the 1kbps class (flowid 1:1):

tc filter add dev eth0 parent 1: protocol ip prio 1 handle 1 fw flowid 1:1

The job is almost done. The last step is to send the port 80 packets to Suricata:

iptables -I FORWARD -p tcp --dport 80 -j NFQUEUE
iptables -I FORWARD -p tcp --sport 80 -j NFQUEUE

If we are sadistic, we can also treat the local traffic:

iptables -I OUTPUT -p tcp --dport 80 -j NFQUEUE
iptables -I INPUT -p tcp --sport 80 -j NFQUEUE

That’s all. With this setup, all MS-Word uploaders share 1kbps of bandwidth.

Effort should pay

Even among MS-Word users, there are some people with a brain, and they will try to escape the MS-Word curse by changing the extension of their documents before uploading them. Effort must pay, so we will change the setup to give them 10kbps for uploading. To do so, we add a signature to word.rules that detects when an MS-Word file is uploaded with a changed extension:

alert http any any -> any any (msg:"Tricky Microsoft Word upload"; \
nfq_set_mark:0x2/0x2; \
fileext:!"doc"; \
filemagic:"Composite Document File V2 Document"; \
filestore; \
sid:667; rev:1;)

The last part of the task is to send packets with mark 2 through a privileged pipe:

tc class add dev eth0 parent 1: classid 1:2 htb rate 10kbps ceil 10kbps
tc filter add dev eth0 parent 1: protocol ip prio 1 handle 2 fw flowid 1:2
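To see how much the effort really pays, here is a small back-of-the-envelope sketch. It assumes that the kbps suffix in tc means kilobytes per second with 1000 bytes per kilobyte, and that the uploader has the class to himself. The function name upload_seconds and the 500000-byte document are made up for the example.

```shell
#!/bin/sh
# Assumption: tc's "kbps" suffix means kilobytes per second, 1000 bytes each.
# upload_seconds SIZE_BYTES RATE_KBPS (hypothetical helper)
upload_seconds() {
    size_bytes=$1
    rate_kbps=$2
    # Integer division, rounded up so a partial second counts as a full one.
    echo $(( (size_bytes + rate_kbps * 1000 - 1) / (rate_kbps * 1000) ))
}

echo "honest .doc upload, 1kbps class: $(upload_seconds 500000 1) s"
echo "renamed upload, 10kbps class:    $(upload_seconds 500000 10) s"
```

Since the class is shared by all uploaders in the same category, the real figures can only be worse.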

I’m sure a lot of you think we have been too kind and that the little cheaters must be watched. We’ve already used the filestore keyword in their signature to put the uploaded file on disk, but that is not enough.

Keep an eye on cheaters

The cheaters’ traffic must be watched. To do so, we will store all the packets they exchange in a pcap file. We will need several tools for this. The first is ipset, which we will use to maintain a list of bad guys. The second is ulogd, which we will use to store their traffic in a pcap file.

To create the blacklist, we will just do:

ipset create cheaters hash:ip timeout 3600
iptables -A POSTROUTING -t mangle -m mark \
--mark 0x2/0x2 \
-j SET --add-set cheaters src --exists

The first line creates a set named cheaters that will contain IP addresses. Every element stays in the set for 1 hour. The second line adds to the set the source IP of every packet carrying mark 2. If the IP is already in the set, its timeout is reset to 3600 thanks to the --exists option.
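The value/mask notation used for the marks deserves a quick illustration. The sketch below reproduces the arithmetic in plain shell: setting a mark only replaces the bits under the mask, and the mark match of iptables succeeds when (mark & mask) equals the value. The function names are made up, and the idea that both signatures can fire on the same packet is an assumption about how the rules are evaluated.

```shell
#!/bin/sh
# Sketch of value/mask semantics; function names are hypothetical.

# New mark after a "set VALUE/MASK": only the bits under MASK are replaced.
set_mark() {   # set_mark OLD VALUE MASK
    echo $(( ($1 & ~$3) | ($2 & $3) ))
}

# The "-m mark --mark VALUE/MASK" test: (mark & MASK) == VALUE.
mark_matches() {   # mark_matches MARK VALUE MASK
    [ $(( $1 & $3 )) -eq $(( $2 )) ]
}

m=$(set_mark 0 0x1 0x1)      # first signature (sid 666)
m=$(set_mark "$m" 0x2 0x2)   # second signature (sid 667) on the same packet
echo "mark after both signatures: $m"

if mark_matches "$m" 0x2 0x2; then
    echo "source IP would be added to the cheaters set"
fi
```

Thanks to the mask, the test on 0x2/0x2 only looks at the second bit, so it does not care whether the first signature has also set its own bit.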

Now we will ask Netfilter to log all the traffic of the IPs in the cheaters set:

iptables -A PREROUTING -t raw \
-m set --match-set cheaters src,dst \
-j NFLOG --nflog-group 1

The last step is to use ulogd to store the traffic in a pcap file. We need to modify a standard ulogd.conf so that the following lines are uncommented:

plugin="/home/eric/builds/ulogd/lib/ulogd/ulogd_output_PCAP.so"
stack=log2:NFLOG,base1:BASE,pcap1:PCAP

Now, we can start ulogd:

ulogd -c ulogd.conf

A PCAP file named /var/log/ulogd.pcap will be created and will contain all the packets of cheaters.
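Once the fun is over, you may want your bandwidth back. Here is a hedged teardown sketch that mirrors the forwarding setup above (not the optional OUTPUT and INPUT rules). The run wrapper and the DRY_RUN variable are made up for the example: by default the commands are only printed, and they are executed only when the script is run as root with DRY_RUN=0.

```shell
#!/bin/sh
# Teardown sketch. DRY_RUN defaults to 1, so commands are only printed;
# run as root with DRY_RUN=0 to really execute them.
DRY_RUN=${DRY_RUN:-1}

# Hypothetical wrapper: print the command in dry-run mode, else execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

teardown() {
    # Stop diverting HTTP traffic to Suricata and logging the cheaters.
    run iptables -D FORWARD -p tcp --dport 80 -j NFQUEUE
    run iptables -D FORWARD -p tcp --sport 80 -j NFQUEUE
    run iptables -t raw -D PREROUTING -m set --match-set cheaters src,dst -j NFLOG --nflog-group 1
    run iptables -t mangle -D POSTROUTING -m mark --mark 0x2/0x2 -j SET --add-set cheaters src --exists
    run iptables -t mangle -D POSTROUTING -j CONNMARK --save-mark
    run iptables -t mangle -D PREROUTING -j CONNMARK --restore-mark
    # The set can only be destroyed once no rule references it.
    run ipset destroy cheaters
    # Removing the root qdisc also removes its classes and filters.
    run tc qdisc del dev eth0 root
}

teardown
```

The order matters a little: the iptables rules that reference the cheaters set are deleted before the set itself.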

Conclusion

It has been hard. We’ve fought a lot. We’ve coded a lot. But now, they are done! We’re able to know more about them than a Facebook admin does!